I originally suggested replacing my sister's ageing (power-hungry, obstreperous, recalcitrant) Core2-based server with a Raspberry Pi and a bunch of USB disks as a bit of a joke. A few minutes later, I had convinced myself it was a fantastic idea.

Sure, the ol' Intel-based machine lived in a tower that had 6 drives (mostly 500GB, some 1TB), a decent (at the time) nVidia GPU, two cores, 6GB RAM and GbE -- but in practice very little of that power was actually properly used. Instead the thing sat idle most of the time; media center activities were infrequent and placed little demand on the machine. Large file transfers weren't common anymore -- it was mostly just trickling changes between a few remote machines (and those were constricted by a residential ADSL pipe anyway).

The maths of just the cap-ex is pretty compelling. In local currency a Model B Pi is about A$50, a decent Pi-friendly hub $30, and 3TB USB drives are $120.

But the power consumption aspect is the really interesting bit -- I expect it will pay for itself (compared to the ol' mini-tower) in 6 months of having the old box turned off. However, electricity in AU is probably more expensive than in most other places around the world. And of course there are other ways you can ameliorate the massive power costs of a cranky old machine with a comparatively inefficient (but once powerful) GPU, and a handful of 5-yo drives with a combined capacity of just 5TB (and RAID-presented capacity of 3.5TB).

However, something that takes up a relatively tiny amount of space, produces almost no heat, draws a modest amount of power, and is especially easy for me to manage remotely has massive appeal. A previous hard disk failure in the tower while I'd been living overseas had caused much consternation when my not particularly hardware-happy sister had to lift the lid on the box and find a drive based on its serial number, extract it from its bay and then put a new one in.

Use case and expectations

The current (Intel tower) box is used for a couple of purposes:

- media center -- running KDE, using VLC for video, and Clementine for audio

- storage of our shared photography collection -- about 70,000 images / 250GB

- gitolite

- spare machine for my sister to use if her desktop suffers a hiccup

- running a few VMs including our old wiki

- backup repository for our various desktops and laptops

A couple of things stand out there -- running VMs isn't something I can (or would want to) do on a RPi, but I was already in the process of migrating their functionality up to a pair of machines in Digital Ocean and Rackspace.

The other is the 'spare desktop' facility, which is only a small problem insofar as the Pi wouldn't be happy running a full KDE suite. This is no biggy -- the bulk of the requirements will be serviced by just having a decent browser available on the Pi, and setting up a cold-spare old desktop for emergencies (perhaps the old server will get pulled into service). This gives a second local backup option also.

Finally, because there's data on this system that I'd rather wasn't easily accessible to any idiot that might pinch the drives, I need the whole thing encrypted. This will involve someone typing a password in at some point during the boot process, but this is a small cost, especially as this system is likely to be on all the time, and the password can be entered remotely via SSH. I'll use a lightweight algorithm, as the RPi isn't burdened with surplus CPU grunt, and I expect any bad guy h4x0r would only spend an hour or two trying to divine the contents of the drives before giving up and formatting them to NTFS.

The configuration process

My machines are almost exclusively managed by Salt -- but the CLI equivalent for installing the prerequisites is:

apt-get install cryptsetup cryptmount hdparm lvm2 mdadm

I plugged the drives in one at a time, monitoring syslog and /proc/partitions to ensure I could identify each drive, and wrote a small label that then got stuck to the respective case. Obviously USB drives don't lend themselves to consistent naming of /dev/sd? on subsequent boots.
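To double-check which stable name currently points at which /dev/sd? device, you can resolve the symlinks under /dev/disk/by-id. This is a minimal sketch rather than what I actually ran; the function name `list_disk_ids` is made up for illustration, and the directory argument exists only so it can be tried against a scratch directory.

```sh
#!/bin/sh
# List each stable by-id name next to the kernel device it currently resolves to.
# list_disk_ids is a hypothetical helper name; the default directory is the
# standard udev location on Debian-like systems.
list_disk_ids() {
    dir="${1:-/dev/disk/by-id}"
    for link in "$dir"/*; do
        [ -L "$link" ] || continue          # skip anything that is not a symlink
        target=$(readlink -f "$link")       # resolve to e.g. /dev/sda1
        printf '%s -> %s\n' "${link##*/}" "$target"
    done
}

# Typical use, filtering for the WWN-based names:
#   list_disk_ids | grep wwn-
```

Run it once after each drive is plugged in and the new entries are the ones to write on the label.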

Now, there's a couple of ways you can achieve a functionally identical end-result -- combining RAID with crypt with logical volumes -- but I prefer to run mdadm to manage software RAID at the lowest level, then crypt the resulting volume, then on top of that set up logical volumes within that crypted meta-device.

The drives I bought came pre-partitioned, albeit formatted to NTFS, so the next step was:

mdadm --create /dev/md0 --level=5 --raid-devices=3 --force \
    /dev/disk/by-id/wwn-0x50014ee603488d0f-part1 \
    /dev/disk/by-id/wwn-0x50014ee603471402-part1 \
    /dev/disk/by-id/wwn-0x50014ee6034139ab-part1

The --force here tells mdadm to create a 3-disk RAID5 array from the get-go. Without that parameter mdadm will create a degraded array with two drives and treat the third as a spare, rebuilding onto it afterwards. I think it's comparable in performance, but prefer to know earlier rather than later that all drives are okay (or not).

And yes, if I'd used /dev/sd? names, mdadm would still track them sanely using its internal UUIDs, but I just like to be tidy.

You can see for yourself when you run mdadm --detail --scan, which you also need to do to populate your mdadm conf file:

mdadm --detail --scan >> /etc/mdadm/mdadm.conf

Note that this assumes the Debian GNU/Linux location of mdadm.conf.

Once the mdadm process is under way you can start setting things up, even though the RAID device is some days (literally) away from being synchronised:

ellie:~# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc1[2] sdb1[1] sda1[0]

5860262912 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

[>....................] resync = 0.5% (15151380/2930131456) finish=6215.8min speed=7815K/sec

unused devices: <none>
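The finish= figure is in minutes, which is awkward to read at this scale. As a small sketch (the function name `mdstat_eta_days` is my own invention), awk can pull it out of mdstat-style text and convert it to days:

```sh
#!/bin/sh
# Print the estimated resync time remaining, in days, from mdstat text on stdin.
# mdstat_eta_days is a hypothetical helper name.
mdstat_eta_days() {
    awk 'match($0, /finish=[0-9.]+min/) {
        # Strip the 7-character "finish=" prefix and the 3-character "min" suffix.
        mins = substr($0, RSTART + 7, RLENGTH - 10)
        printf "%.1f\n", mins / 1440        # 1440 minutes per day
    }'
}

# Typical use:
#   mdstat_eta_days < /proc/mdstat
```

For the output above (finish=6215.8min) that works out to a bit over four days.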

Setting up crypto and some volume groups on the RAID meta-device is straightforward:

cryptsetup --cipher aes-cbc-essiv:sha256 --key-size 128 luksFormat /dev/md0

cryptsetup luksOpen /dev/md0 phat

pvcreate /dev/mapper/phat

vgcreate vg_phat /dev/mapper/phat

I wanted to go for Blowfish, but it's not natively present on Raspbian, and I quickly lost interest in working that one out, so opted for the allegedly comparably snappy aes, with a key size of 128 bits -- plenty good enough for my requirements (my primary use case is to prevent anyone who pinches my machine from finding any private data within the couple of hours they're likely to bother trying prior to just formatting the drives).

A single pv/vg makes sense here -- it's close to 6TB of presented storage, and it's likely that there'll only be two or three Logical Volumes configured.

ellie:~# pvs

PV VG Fmt Attr PSize PFree

/dev/mapper/phat vg_phat lvm2 a-- 5.46t 5.46t

ellie:~# vgs

VG #PV #LV #SN Attr VSize VFree

vg_phat 1 0 0 wz--n- 5.46t 5.46t
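The 5.46t that LVM reports squares with the mdstat figures: RAID5 gives you the capacity of all drives but one, and LVM counts in binary units. A quick sanity check, as a sketch (the function name `raid5_usable_tib` is hypothetical; the per-device block count is the one from /proc/mdstat earlier):

```sh
#!/bin/sh
# Usable RAID5 capacity in TiB: (devices - 1) * per-device size.
# Arguments: number of devices, per-device size in 1 KiB blocks.
# raid5_usable_tib is a hypothetical helper name.
raid5_usable_tib() {
    awk -v n="$1" -v kib="$2" \
        'BEGIN { printf "%.2f\n", (n - 1) * kib / (1024 * 1024 * 1024) }'
}

raid5_usable_tib 3 2930131456   # prints 5.46
```

So "close to 6TB" in drive-vendor decimal units and 5.46t in pvs are the same thing.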

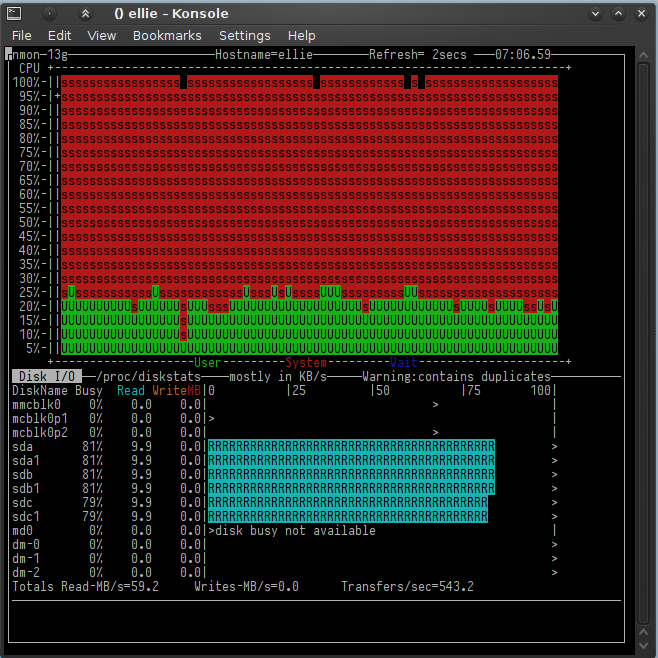

Even though the poor little thing was properly shitting itself . . .

nmon gronking


. . . the formatting of reiserfs (yeah, I'm still a bit of a fan, at least until I can move to btrfs) was as impressively speedy as ever.

lvcreate --name home --size 500G vg_phat

Logical volume "home" created

lvcreate --name pub --size 3000G vg_phat

Logical volume "pub" created

mkfs.reiserfs -l home /dev/mapper/vg_phat-home

mkfs.reiserfs -l pub /dev/mapper/vg_phat-pub

I thought it more polite to wait for the RAID device to sync before trying to populate the thing with actual data.
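After a reboot, the same layers have to come back up in the same order before anything can be mounted, which is the step that gets done over SSH. The following is only a sketch of how that might look: it assumes the array has already been assembled at boot from mdadm.conf, the mount points /home and /srv/pub are assumptions, and the names `run`, `unlock_phat` and `DRY_RUN` are made up for illustration.

```sh
#!/bin/sh
# Bring the stack back up after a reboot: LUKS -> LVM -> mounts.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Assumes /dev/md0 is already assembled; mount points are assumptions.
unlock_phat() {
    run cryptsetup luksOpen /dev/md0 phat &&        # prompts for the passphrase
    run vgchange -ay vg_phat &&                     # activate the logical volumes
    run mount /dev/mapper/vg_phat-home /home &&
    run mount /dev/mapper/vg_phat-pub /srv/pub
}

# Preview what would be run:
#   DRY_RUN=1 unlock_phat
```

Chaining with && means a mistyped passphrase stops the sequence before LVM or mount get a chance to complain.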

Performance and usability considerations

I've only just started building this, so the jury is out on how usable it's going to be for the range of use cases I have in mind.

Running hdparm -t /dev/md0 while the array was assembling itself gave consistent results around the 38 MB/s range. I anticipate slightly better performance once the build isn't chewing up so much of the CPU and bandwidth. However, 38 MB/s is plenty for our purposes, and if my maths is right, 60 MB/s (480 Mbit/s divided by 8) is the theoretical best you can achieve across USB2 in any case, before protocol overhead.

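The content of /proc/mdstat makes it clear that the default raid rebuild speed limits weren't an issue: the resync was running at about 7,800K/sec, nowhere near the ceiling. Check them on your system (the values are in KB/s per device), and note that a max of 200,000 seems common in the Debian 7 / Linux 3.9 era.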

cat /proc/sys/dev/raid/speed_limit_min

cat /proc/sys/dev/raid/speed_limit_max
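If you'd rather see both values on one line, a small wrapper does it. This is a sketch only; the function name `raid_speed_limits` is made up, and the directory argument is there purely so it can be pointed at a scratch directory.

```sh
#!/bin/sh
# Show the md resync speed limits (KB/s per device) on one line.
# raid_speed_limits is a hypothetical helper name.
raid_speed_limits() {
    dir="${1:-/proc/sys/dev/raid}"
    printf 'min=%s max=%s\n' \
        "$(cat "$dir/speed_limit_min")" \
        "$(cat "$dir/speed_limit_max")"
}

# Typical use:
#   raid_speed_limits
# To raise the floor until the next reboot (as root; 50000 is an arbitrary example):
#   echo 50000 > /proc/sys/dev/raid/speed_limit_min
```

On the Pi there was no point touching them, since the resync never got anywhere near the max.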

If direct performance turns out not to be enough, I'm happy to have a small staging drive (SSD) attached to the USB hub also, or just use some of the spare 10GB on the SD card, though the double-shuffling would be a bit of a pain. And, as above, it looks like the RAID-5 to mag media is going to provide sufficient performance when used directly -- from what I can tell there'll be plenty of capacity to stream in even HD mkv files at 1GB / hour bitrate, which you'd naturally feel would be okay anyway across USB-2 from SATA-2 disks.
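To put a number on that streaming claim, here's the conversion from GB per hour to MB per second, as a rough sketch using decimal units (the function name `gb_per_hour_to_mb_per_sec` is made up for illustration):

```sh
#!/bin/sh
# Convert a media bitrate in GB per hour to MB per second (decimal units).
# gb_per_hour_to_mb_per_sec is a hypothetical helper name.
gb_per_hour_to_mb_per_sec() {
    awk -v gb="$1" 'BEGIN { printf "%.2f\n", gb * 1000 / 3600 }'
}

gb_per_hour_to_mb_per_sec 1   # prints 0.28
```

Against the roughly 38 MB/s that hdparm measured, a 0.28 MB/s stream leaves more than a hundredfold headroom, so even several simultaneous streams shouldn't trouble the array.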

I'm undecided on whether to get a fourth disk into the array, even as a cold-spare, but suspect that if/when a drive fails, the ~5 days of risk exposure is something we can wear. The data on this system is important, but most of it, at least all the important stuff, is backed up and shared between a few of us in remote locations and on multiple machines.

In the event of a disk failure, I'd experiment with putting the new (blank) drive directly into the spare USB socket of the Pi -- I'm confident the big performance problem right now is that all three drives are coming into the Pi via a single USB channel. While I expect the Pi has all kinds of internal bottlenecks with its USB sub-system, I'd hope that just getting the busier drive onto its own channel could provide some small benefit. I did try flipping one drive during the build process onto a USB port on the Pi itself but saw no change in raid assembly performance (which kind of matched my expectations and understanding of what's involved in assembling RAID5).