I am in the process of writing up some benchmarking analytics for my QNAP 569L NAS -- but while enjoying that journey I discovered several challenges with NFS and iSCSI that are surprising given the professional nature of these appliances. Specifically, as sold, the appliance isn't fit for purpose. After several days of experimenting with different options in an attempt to get around fatal errors occurring on the appliance, I have finally got my QNAP successfully serving a handful of iSCSI LUNs to an ESXi machine without crashing. On the downside it required installation of beta firmware -- neither encouraging nor comforting.

Apparently the Q in QNAP stands for Quality.

So it's unsettling that there are plenty of people on the QNAP forums having substantively the same problem -- using iSCSI causes their QNAP to shit itself -- but no formal explanations, workarounds, or even statements on the subject.

In QNAP's defence, they haven't (yet :) removed my relatively polite observations on the subject from their forums.

On the other hand, they haven't responded to my support query about this problem either -- I don't count the automated response two days ago, asserting that they'd get back to me within 24 hours, as a proper response.

2014-03 EDIT -- I received a response from QNAP support, followed by to-and-fro emailing over the past couple of weeks. I provided system dumps and other configuration information. I am undeniably pleased to see that they're trying to investigate this problem, but I'm frustrated that I can't provide more information at this end, given that I resolved the problem by upgrading to a new (beta) firmware. (see below)

I'm worried that they aren't connecting the dots with the other people reporting these types of issues, and that they evidently don't have the capability to trace how this was fixed sometime between the 4.0 production and (current) 4.1 beta releases, either through their internal issue tracker or changelogs. I'm also concerned that they seemingly can't replicate this issue, despite other reporters having comparably simple network configurations, and using the same version of firmware (as shipped on their appliances) while talking to the QNAP from a variety of Microsoft and GNU/Linux platforms.

The symptoms

On the QNAP there are 4 LUNs under a single iSCSI Target, and these are each attached over a dedicated GbE interface to an ESXi 5.5 host.

These are mapped as block storage devices to one VM guest running Debian GNU/Linux.

If left idle, this was quite stable -- fdisk, directory scans, this kind of access was evidently tolerated by the QNAP.

But push data to it, and within 5-10 minutes (rarely longer) the QNAP 569L would simply crash and reboot.

The background

The Debian GNU/Linux guest is fronting my data for two reasons.

First, I need on-disk (QNAP) encryption that performs better than the QNAP can muster.

Second, and more importantly, I need better granularity of NFS share permissions than the QNAP can offer.

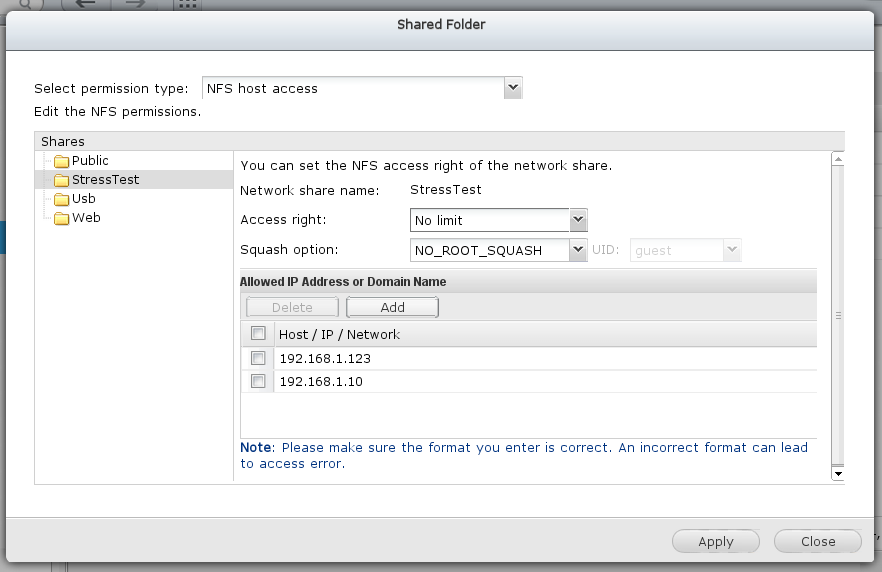

Here's the QNAP's configuration screen (this is the latest, beta firmware, btw) for NFS permissions.

QNAP NFS Configuration

The Squash option is new to the 4.1 beta firmware, as an aside.

The problem is that you can select an Access right (as shown) but can't assign that to a specific Allowed IP Address or Domain Name. The Access Right is for the share in its entirety. Which profoundly limits the usefulness of NFS shares for me (and judging by the forums, for quite a few others too).
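For comparison, a stock Linux NFS server expresses per-client access rights directly in `/etc/exports`, with each client on the line carrying its own option list. The sketch below only illustrates that format; the share path and addresses are hypothetical, and it makes no claim about how the QNAP firmware stores or generates its own configuration.

```python
def render_export(path, clients):
    """Build one /etc/exports line in which every client has its own options."""
    entries = [f"{host}({','.join(opts)})" for host, opts in clients.items()]
    return f"{path} {' '.join(entries)}"


# Hypothetical share path and client addresses, for illustration only.
line = render_export(
    "/srv/nfs/media",
    {
        "192.168.1.10": ["rw", "sync", "no_root_squash"],   # one trusted host
        "192.168.1.0/24": ["ro", "sync", "root_squash"],    # everyone else on the LAN
    },
)
print(line)
```

One host gets read-write access while the rest of the subnet is read-only, all on the same share, which is exactly the granularity the single Access right setting can't express.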

Apparently there are ways you can work around this, and they involve going into the CLI (that's okay, I ain't afraid of no CLI) and making changes to the configuration files. And that's where it gets a bit more delicate as you do have to ensure you change the right one, as there are two sets -- one being autogenerated from the other.

Anyway, none of that is the point. The point is that I don't want to have to manage this kind of thing, because managing cruft like this means making a written note of it somewhere, and remembering to consult it, or update it, whenever I need to revisit this aspect of the device.

I'm a big fan of SaltStack for managing as much as possible of my computer configurations. This kind of under-the-hood fiddling, to persuade an ostensibly high-end and sophisticated NAS appliance to do things it should just have done out of the box in the first place, and which may end up getting reverted by a future firmware change, is anathema.

The attempts at resolution

Partly from desperation, and partly from consulting other people's desperate attempts they'd documented on the QNAP forums, I tried a few things.

Reverting the MTU from 9000 back down to 1500 (configuration done on both the ESXi and QNAP sides of that link). I'd already proven out that ESXi didn't have a problem with an MTU of 9000, and I'd transferred much data with jumbo frames to an NFS share during earlier testing.

Didn't fix the problem, in any case.
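As a rough sense of what is at stake between the two MTU values, the share of each frame's time on the wire that carries payload can be estimated. This is a back-of-envelope sketch that assumes plain IPv4 and TCP headers with no options, no VLAN tag, and ignores iSCSI's own PDU headers.

```python
# Per-frame Ethernet cost outside the MTU:
# preamble/SFD 8 + header 14 + FCS 4 + inter-frame gap 12 = 38 bytes.
ETHERNET_OVERHEAD = 38
# Assumed headers inside the MTU: IPv4 20 + TCP 20, no options.
IP_TCP_HEADERS = 40


def payload_efficiency(mtu):
    """Fraction of wire time spent on TCP payload for full-sized frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} payload")
```

Under those assumptions the gap is only a few percentage points of wire efficiency, so dropping back to 1500 costs little while testing.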

Then I tried disabling the Delayed Ack setting on the ESXi configuration (for the Storage Adapter). It seemed unlikely that this would have an effect, though the way that setting is described suggests that it may improve the quality and reliability, while slightly reducing the performance, of an iSCSI connection.

It didn't fix the problem, either.

Asking QNAP for assistance

I'd spent upwards of A$700 on this device, and I'd chosen it because it was a 'professional quality' appliance, and would mean I'd save a stack of time by not having to build and configure my own NAS box from scratch.

The facts that a) it couldn't let me have different NFS permissions for one share (an NFS feature we've been enjoying for more than two decades), and b) it rebooted consistently whenever it tried to ingest some iSCSI data ... reduce my confidence in this whole 'professional quality' label.

I raised a ticket with QNAP through their (predictably) discouraging web interface. It's discouraging for several reasons. It reads like you can only lodge a support ticket if you're based in the USA or Canada (clearly not the case, and hopefully not their intent). There are two different places where you can lodge a ticket, one of which is much more of a query form than a problem management system, with only a teeny-tiny little box to describe your problem. And, finally, there's no way to track the progress of your support ticket once lodged.

On the page where you can raise a support ticket you're also given a range of depressing options, as they not-so-gently encourage you to not raise a ticket in the first place.

They suggest that 'You really might want to try talking to the people you bought it from first' (I'm confident I'm not alone in knowing that the place I buy my hardware from is clueless about resolving any non-trivial problem with a vendor's kit).

Then 'Perhaps check our FAQ first'. I had checked their FAQ on the off-chance that they conceded their appliances don't actually do what their advertising says they can do.

Then 'Perhaps post in our forums'. I did this also, hopping onto one of the existing threads relating to iSCSI failing.

Depressingly I still haven't heard back from QNAP support -- despite their automated response promising that they'd get back to me within 24 hours.

Alternative medicine

As I say, I noted several people claiming to have similar problems with iSCSI, and a couple of them had jumped into the deep end and swallowed a beta release of QNAP's firmware -- going up to version 4.1.0. This was packaged up on the Friday before everyone would have buggered off on their Christmas holidays, which is even more discouraging than the word beta by itself.

But these people had reported some modicum of success by going down this path, and absent any other option, plus no particular attachment to my current configuration, and indeed comfortable with having to re-import the modest quantity of data I'd already copied up to the appliance, I thought it worth a try.

Results

Very early results suggest that it's stable.

And by Very early I mean that so far I've just transferred a mere 800GB without error. Compare and contrast to the QNAP rebooting (with 4.0.5) after maybe 5-10 minutes of that (alleged) 'load'.

2014-03-01 EDIT: Actually, I've now done just shy of 3TB, and for the last 2TB of that I was running multiple copies to the NFS share.

It's too early to say whether this is a proper long term solution, and I'm concerned about what I shall do when the 4.1 branch comes out of beta (one hopes that beta releases are purely for bug fixes, not feature changes, but I can't find any confirmation of that on QNAP's site -- let alone a Changelog).

But 12+ hours of solid ingesting is a very good sign, even if not rebooting itself every 10 minutes is a pretty low bar.

I'm wary of calling this a raging success (a raging success would be an A$700 box that actually worked out of the, erhm, box). But after a day or two of fiddling, diagnosing, and trying to resolve the problem, this is as close to a success as I think I can expect.

Future purchases are definitely going to be based around building my own NAS from scratch, probably using the U-NAS 8-disk chassis and running my own OS, which I can then manage sensibly (rather than subserviently). The 170MB 'firmware' approach QNAP uses is fraught with danger and inconvenience, not least because of the strong recommendation that you don't ever go backwards in versions.

On your own managed OS you can do whatever you like in terms of versions, plus you know the versions of each sub-system, so you can do things like read Changelogs on the Linux iSCSI homepage.

I probably should have done more research, but I now feel that too much of the QNAP cost is there to cover this massive collection of applets. The 'free software' packages for media transcoders, torrent clients, VPN and MySQL, I'm sure, don't cost much to package up, but there's a huge collection of CRM, CMS, Surveillance and other utilities that I'll simply never use on my QNAP.

I'm just too addicted to managing my applications with a dose of Salt over my apt.

Call me old-fashioned.